Storage management and configuration requires more attention than the other two resources, CPU and memory. That's because you cannot categorize CPU resources by low-level features such as L1/L2 cache capacity, or memory by bus speed; the only options available to you are the number of vCPUs and the memory capacity. Storage, on the other hand, gives you many more options for provisioning a specific VM on the right storage. You might have a variety of storage systems in your environment. For example, one might be an all-flash array built on SSDs, while another uses storage tiering technology.

Before we dig into the details, let's take a look at vSphere storage policies and their role in vRA. As you already know, you can manage vSphere storage infrastructure using VM Storage Policies. The methods available depend on which storage system is being used and how it interacts with the ESXi hosts; features like VASA (vSphere APIs for Storage Awareness) can play an important role here. Besides these capabilities, you can create tags, attach them to datastores, and use them in your policy to place VMs on specific datastores. Now the question is: how does a vSphere storage policy relate to storage configuration in vRA? In vRealize Automation, you manage storage through storage profiles, and one of the properties of a storage profile is a vSphere storage policy. Now let's see what makes up a vRA storage profile.

Storage Profile Components

1. Disk Type

There are two categories of disk types in vRealize Automation:

- Standard Disk

- First Class Disk (FCD)

FCD is supported on vSphere only and is managed through the API. FCD offers lifecycle management features that are independent of the VM it is attached to. For instance, you can create a snapshot of an FCD independently of its parent VM. You can create, delete, resize, attach, and detach an FCD, as well as manage FCD snapshots. You can also convert an existing Standard Disk to an FCD. For more information on FCD, see the VMware documentation.

Standard Disks can be persistent or non-persistent. These terms are quite similar to what we have in vSphere. Put simply, when you delete a VM, its dependent and independent non-persistent disks are deleted along with it. If the VM has an independent persistent disk, that disk is not a candidate for deletion and remains intact. Standard Disks are not limited to vSphere; the public clouds offer their own equivalents:
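To make the deletion rules concrete, here is a minimal Python sketch that encodes them exactly as stated above. The `DiskMode` enum and the helper names are hypothetical; the code only models the described behavior and does not call any vRA or vSphere API.

```python
from __future__ import annotations

from enum import Enum


class DiskMode(Enum):
    """Disk modes offered in a vRA storage profile (the Disk Mode setting)."""
    DEPENDENT = "dependent"
    INDEPENDENT_PERSISTENT = "independent-persistent"
    INDEPENDENT_NONPERSISTENT = "independent-nonpersistent"


def deleted_with_vm(mode: DiskMode) -> bool:
    """True if a standard disk in this mode is removed when its VM is deleted.

    Encodes the rule stated in the article: dependent and independent
    non-persistent disks go away with the VM; independent persistent disks stay.
    """
    return mode is not DiskMode.INDEPENDENT_PERSISTENT


def disks_remaining_after_vm_delete(disks: dict[str, DiskMode]) -> list[str]:
    """Given disk names mapped to modes, return the disks that survive VM deletion."""
    return [name for name, mode in disks.items() if not deleted_with_vm(mode)]


if __name__ == "__main__":
    vm_disks = {
        "os-disk": DiskMode.DEPENDENT,
        "scratch": DiskMode.INDEPENDENT_NONPERSISTENT,
        "data": DiskMode.INDEPENDENT_PERSISTENT,
    }
    print(disks_remaining_after_vm_delete(vm_disks))  # ['data']
```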

- AWS EBS: a persistent volume that is not deleted when its parent VM is deleted.

- Microsoft Azure VHD: also a persistent disk, but when the VM is deleted, you can specify whether to keep the disk or delete it.

- Google Cloud (GCP) persistent disk: also independent of its parent VM; when the VM is deleted, its disks are detached.

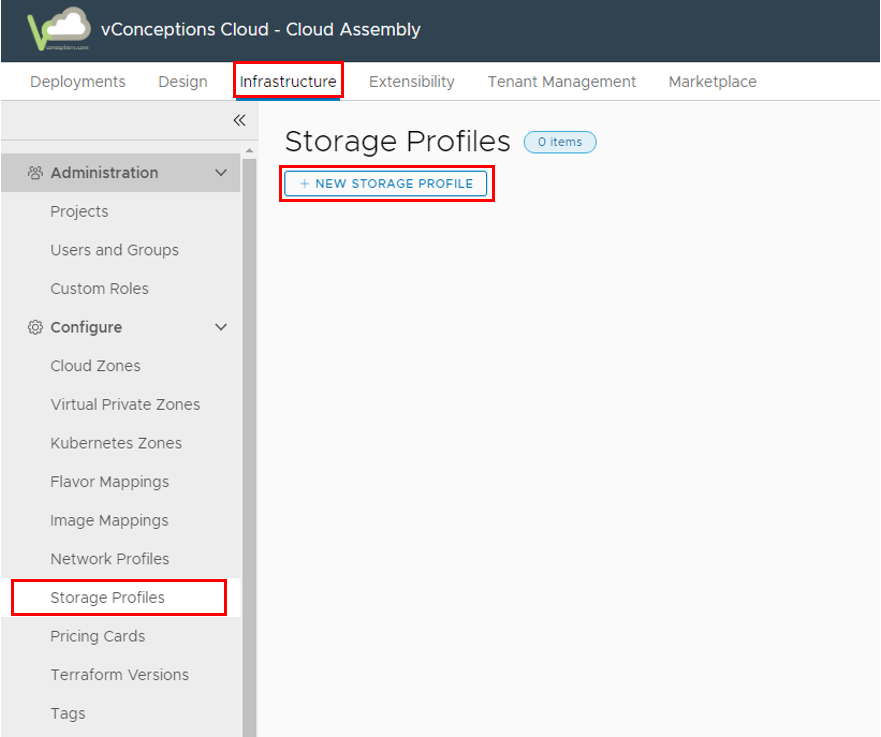

To create a storage profile in Cloud Assembly, click the Infrastructure tab, go to the Storage Profiles section, and click the plus sign.

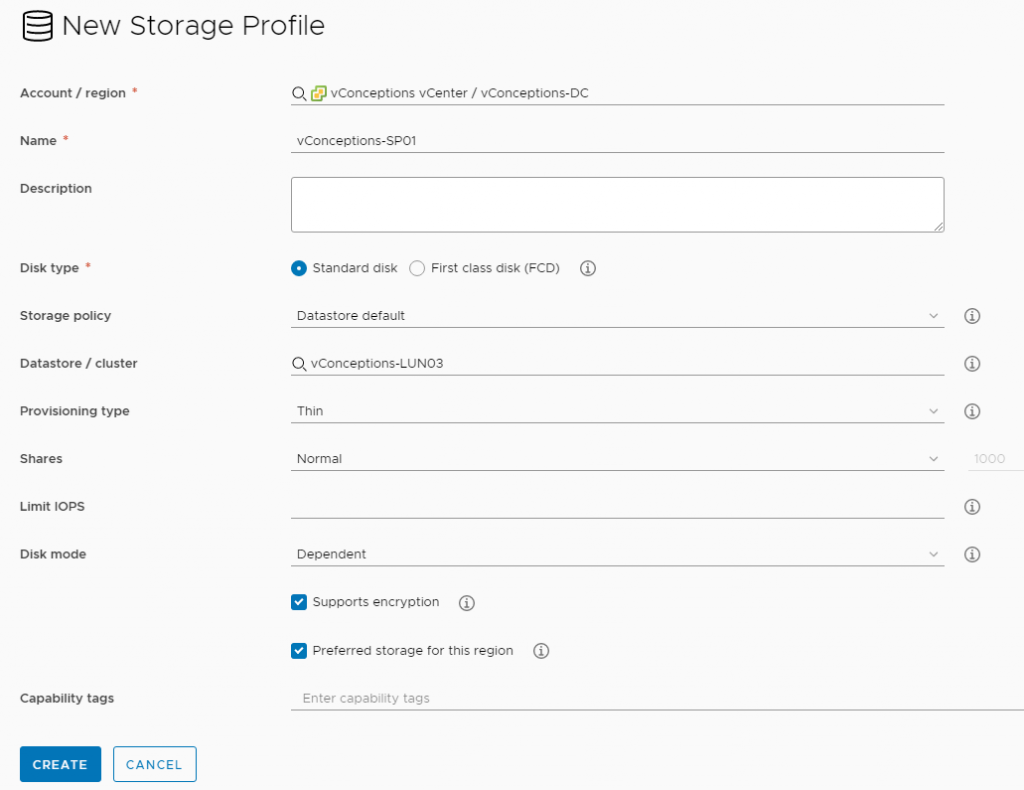

The wizard walks you through the following settings:

- Give the storage profile a name and a description.

- Specify the Disk Type: Standard or FCD.

- Choose an SPBM storage policy; here, the default policy is selected.

- Choose a datastore or datastore cluster; here, a single datastore is selected.

- Provisioning Type: choose between Thin, Thick, and Eager Zeroed Thick.

- Shares: specify a share value so that, in case of contention, disks with a relatively higher value receive more IOPS.

- Limit IOPS: limit the IOPS of the disk; if nothing is specified, the IOPS is unlimited.

- Disk Mode: choose between Dependent, Independent Persistent, and Independent Non-persistent.

- Supports Encryption: enable encryption on the provisioned disks.

- Preferred Storage for this region: if checked, vRA uses this storage profile whenever possible. This option can be overridden; for example, a constraint tag in the blueprint takes precedence over it.

- Capability tags: tags assigned to this storage profile, which can later be used in blueprints.

Finally, click Create to finish.
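The Shares and Limit IOPS settings interact when disks contend for the same storage. The following Python sketch is a simplified, hypothetical model of proportional-share allocation with per-disk caps (a water-filling loop); it is not how vSphere Storage I/O Control is actually implemented, and the `DiskIO` type, the `allocate_iops` function, and the capacity figure are illustrative assumptions.

```python
from __future__ import annotations

from typing import NamedTuple, Optional


class DiskIO(NamedTuple):
    shares: int                      # relative weight under contention (must be > 0)
    limit: Optional[float] = None    # IOPS cap; None means unlimited


def allocate_iops(capacity: float, disks: dict[str, DiskIO]) -> dict[str, float]:
    """Split `capacity` IOPS among contending disks in proportion to their shares.

    Disks whose proportional slice would reach their IOPS limit are pinned at
    the limit, and the leftover is re-split among the remaining disks.
    """
    if any(d.shares <= 0 for d in disks.values()):
        raise ValueError("shares must be positive")
    alloc = {name: 0.0 for name in disks}
    active = set(disks)
    remaining = float(capacity)
    while active and remaining > 1e-9:
        total = sum(disks[n].shares for n in active)
        # Disks whose share of what is left would reach or exceed their limit.
        capped = {
            n for n in active
            if disks[n].limit is not None
            and remaining * disks[n].shares / total >= disks[n].limit
        }
        if not capped:
            # No disk hits its limit: hand out the rest proportionally and stop.
            for n in active:
                alloc[n] = remaining * disks[n].shares / total
            remaining = 0.0
        else:
            # Pin capped disks at their limit and redistribute what remains.
            for n in capped:
                alloc[n] = float(disks[n].limit)
                remaining -= alloc[n]
            active -= capped
    return alloc


if __name__ == "__main__":
    demo = {
        "db-data": DiskIO(shares=2000),
        "app-data": DiskIO(shares=1000),
        "logs": DiskIO(shares=1000, limit=100),
    }
    print(allocate_iops(1000, demo))
    # {'db-data': 600.0, 'app-data': 300.0, 'logs': 100.0}
```

In this model, the limited `logs` disk is pinned at 100 IOPS and the remaining 900 IOPS are split 2:1 by shares. Raising the shares of `app-data` to 2000 would give both data disks 450 IOPS each.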

To make a VM use a specific storage policy, you constrain the machine with a tag under the storage property in the YAML code. Note that storage policies are not attached to the VM itself but to its individual disks. This lets you assign different storage policies to different disks so that each disk meets the performance level it needs. In short, you have two options:

- Specify a constraint tag in the VM properties in the YAML code that points to a storage profile; as a result, all disks of the VM are provisioned using the matched storage policy. The tags need to be unique so that your intended storage policy is matched; otherwise, one of the matching options is selected at random.

```yaml
Cloud_vSphere_Machine_1:
  type: Cloud.vSphere.Machine
  properties:
    image: '${input.Image}'
    cpuCount: '${input.vCPUs}'
    totalMemoryMB: '${input.MemorySizeinMB}'
    attachedDisks:
      - source: '${resource.Cloud_vSphere_Disk_1.id}'
    networks:
      - network: '${resource.Cloud_vSphere_Network_1.id}'
    # Machine-level storage constraint: all disks of this VM are placed
    # using the storage profile whose capability tag matches "SSD"
    storage:
      constraints:
        - tag: SSD
```

In the above example, the VM is assigned the constraint tag SSD.

- Specify the storage policy in an individual disk's properties in the YAML code.

```yaml
Cloud_vSphere_Disk_1:
  type: Cloud.vSphere.Disk
  properties:
    capacityGb: '${input.DiskSizeInGB}'
    # Disk-level setting: only this disk uses the named storage policy
    storagePolicy: vConceptions-SP01
```

In the above example, one of the VM's disks is assigned a storage policy named vConceptions-SP01, the one we created earlier.
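The two options above boil down to a per-disk lookup. As a hedged illustration, the following Python sketch models that resolution. The `StorageProfile` fields, the function names, the policy names, and the rule that a disk-level storagePolicy takes precedence over machine-level tags are all assumptions for illustration; this is not vRA's actual placement logic.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StorageProfile:
    name: str
    storage_policy: str                        # vSphere storage policy behind the profile
    capability_tags: frozenset = frozenset()   # capability tags set in the wizard
    preferred: bool = False                    # "Preferred Storage for this region"


def match_profile(profiles, constraint_tags, rng=random):
    """Pick a storage profile for a machine's storage constraint tags."""
    if constraint_tags:
        # Every constraint tag must appear among the profile's capability tags.
        candidates = [p for p in profiles if set(constraint_tags) <= p.capability_tags]
    else:
        # No constraint: fall back to preferred profiles, then to any profile.
        candidates = [p for p in profiles if p.preferred] or list(profiles)
    if not candidates:
        raise LookupError(f"no storage profile matches {sorted(constraint_tags)}")
    # Non-unique tags leave several candidates; one is picked at random.
    return rng.choice(candidates)


def resolve_disk_policies(profiles, machine_tags, disks, rng=random):
    """Map each disk name to a storage policy.

    `disks` maps a disk name to its explicit storagePolicy, or None when the
    disk inherits from the machine-level constraint tags.
    """
    inherited: Optional[str] = None
    result = {}
    for disk, explicit in disks.items():
        if explicit is not None:
            result[disk] = explicit  # assumption: the disk-level setting wins
        else:
            if inherited is None:
                # All inheriting disks share the single matched profile.
                inherited = match_profile(profiles, machine_tags, rng).storage_policy
            result[disk] = inherited
    return result


if __name__ == "__main__":
    profiles = [
        StorageProfile("flash", "SSD-Policy", frozenset({"SSD"})),
        StorageProfile("tiered", "Tiered-Policy", frozenset({"tiered"}), preferred=True),
    ]
    print(resolve_disk_policies(profiles, ["SSD"], {"boot": None, "data": "vConceptions-SP01"}))
    # {'boot': 'SSD-Policy', 'data': 'vConceptions-SP01'}
```

If a second profile also carried the SSD tag, `match_profile` could return either one, which mirrors the uniqueness caveat above.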

This concludes our look at vRA storage provisioning; I hope it has been informative for you.